Test AI on YOUR Website in 60 Seconds

See how our AI instantly analyzes your website and creates a personalized chatbot - without registration. Just enter your URL and watch it work!

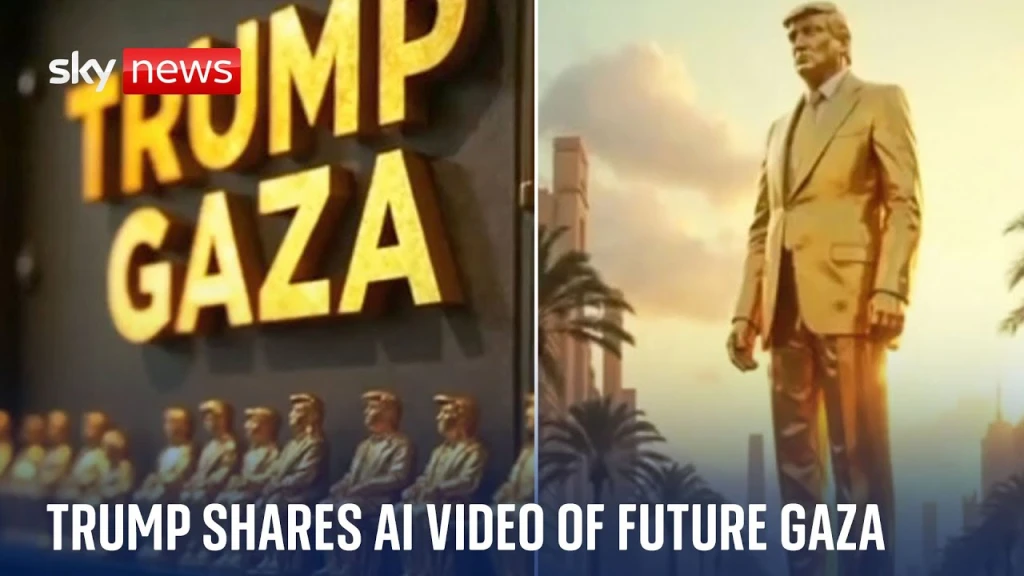

1. Introduction: The Rise of Deepfake Technology in Politics

One of the most recent and controversial examples of deepfake technology in politics is the "Trump Gaza AI Video", which allegedly features AI-generated content related to Donald Trump's stance on Gaza. This blog explores how deepfake technology affects politics, elections, and public opinion, and why regulation and media literacy are increasingly needed.

2. What is the Trump Gaza AI Video?

The Trump Gaza AI video reportedly shows AI-generated footage of Donald Trump discussing the Gaza conflict.

It features realistic deepfake visuals and AI-generated speech, making it difficult to distinguish from authentic footage.

The video has been circulated widely on social media platforms, news sites, and political forums.

Who Created It and Why?

The origins of the video remain unclear, with speculation that it was created by political groups, activists, or misinformation networks.

The purpose could range from political manipulation and propaganda to satire or AI awareness campaigns.

Public Reaction and Media Response

Some viewers believed the video was real, which led to heated political debates and the further spread of misinformation.

Media organizations and fact-checkers have worked to debunk the video and clarify its AI-generated nature.

The controversy highlights how easily deepfakes can distort public perception.

3. How Deepfake Technology is Reshaping Political Communication

Deepfake technology enables highly convincing fake speeches, policy endorsements, and fabricated statements.

AI-generated videos can be weaponized to sway public opinion, damage reputations, or create confusion before elections.

The Threat to Democracy and Trust in Media

The spread of AI-generated content erodes trust in traditional media and political institutions.

Politicians and public figures can deny real events by claiming they are deepfakes (the “liar’s dividend”).

Deepfakes contribute to polarization and to the spread of manipulated narratives in global politics.

The Impact on Public Perception and Elections

AI-manipulated content can mislead voters, influence campaigns, and potentially alter electoral outcomes.

Political opponents may use deepfakes to smear candidates or create fake policy endorsements.

Rapid distribution via social media amplifies misinformation, making fact-checking difficult.

4. How Can We Combat Deepfake Misinformation?

Companies like Google, Microsoft, and OpenAI are developing AI-powered deepfake detection algorithms.

Fact-checking organizations use machine learning to verify video authenticity.

Media Literacy and Public Awareness

Educating the public about deepfake technology and misinformation tactics is essential.

Platforms should include warning labels on suspected AI-generated content.

Government Regulations and Legal Frameworks

Countries are considering laws to regulate AI-generated misinformation and deepfakes.

The EU AI Act, along with proposed U.S. federal bills and a growing number of state laws, aims to increase transparency and accountability for AI-generated content.

Social media platforms must enforce stricter policies against misleading AI-generated content.

5. The Future of Deepfake Technology in Politics

Political campaigns are already using AI to create customized video messages and automated responses.

Ethical concerns arise when AI is used to alter candidate messaging or mislead voters.

AI's Role in Political Debates and Disinformation Warfare

AI-generated videos could be used to simulate political speeches, debates, or fabricated policy statements.

Governments may use AI for propaganda, psychological operations, and digital warfare.

The Need for Ethical AI Governance

AI’s growing role in politics necessitates ethical AI development and regulatory oversight.

Collaboration between tech companies, governments, and fact-checkers is crucial for maintaining election integrity.

6. Conclusion: The Double-Edged Sword of AI in Politics

To combat the spread of AI-generated misinformation, we must prioritize transparency, regulation, and media literacy. As deepfake technology continues to evolve, the challenge remains: How can we balance innovation with truth and accountability in an AI-driven world?